Installing Ollama with Docker to Deploy Local Large Models and Connect to One-API

Published: 2024-08-18 | Modified: 2024-08-18

Ollama is an open-source tool for conveniently running open-source large models locally, including Tsinghua University's ChatGLM, Alibaba's Qwen, and Meta's Llama, among others. Ollama currently supports the three major operating systems: macOS, Linux, and Windows. This article explains how to install Ollama with Docker, deploy local large models with it, and connect it to one-api so that the models you need can be called through a unified API.

Hardware Configuration

Large models have high hardware requirements, so the more powerful the machine, the better. A dedicated graphics card helps considerably, and at least 32GB of RAM is recommended. The author deployed it on a dedicated server with the following configuration:

- CPU: E5-2696 v2

- RAM: 64G

- Disk: 512G SSD

- Graphics Card: None

Note: My dedicated server does not have a graphics card, so it can only run on CPU.

Installing Ollama with Docker

Ollama now supports installation via Docker, which greatly simplifies deployment for server users. Here we use Docker Compose to run Ollama. First, create a new docker-compose.yaml file with the following content:

version: '3'

services:

ollama:

image: ollama/ollama

container_name: ollama

ports:

- "11434:11434"

volumes:

- ./data:/root/.ollama

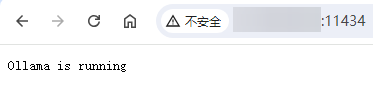

restart: alwaysThen, enter the command docker compose up -d or docker-compose up -d to run it. After running, access: http://IP:11434, and if you see the message Ollama is running, it indicates success, as shown in the image below:
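The same check can be scripted instead of opening a browser. The following is a minimal sketch using only the Python standard library; the address in OLLAMA_URL is a placeholder assumption, and the helper names are made up for illustration.

```python
import urllib.request

# Placeholder address (an assumption); replace with your server's IP or hostname.
OLLAMA_URL = "http://127.0.0.1:11434"


def looks_like_ollama(body: str) -> bool:
    """Return True if the page body contains Ollama's status message."""
    return "Ollama is running" in body


def check_ollama(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> bool:
    """Fetch the root URL and report whether Ollama answered."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return looks_like_ollama(resp.read().decode("utf-8"))
    except OSError:
        # Covers connection refused, timeouts, DNS failures and HTTP errors.
        return False
```

Calling check_ollama("http://IP:11434") returns True once the container is up and False if the port cannot be reached.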

If your machine has a GPU, you can enable GPU support by adding the relevant parameters. Refer to: https://hub.docker.com/r/ollama/ollama

Using Ollama to Deploy Large Models

After installing Ollama, you need to download large models. Supported large models can be found on the Ollama official website: https://ollama.com/library. Ollama does not provide a web interface by default and needs to be used via the command line. First, enter the container with the command:

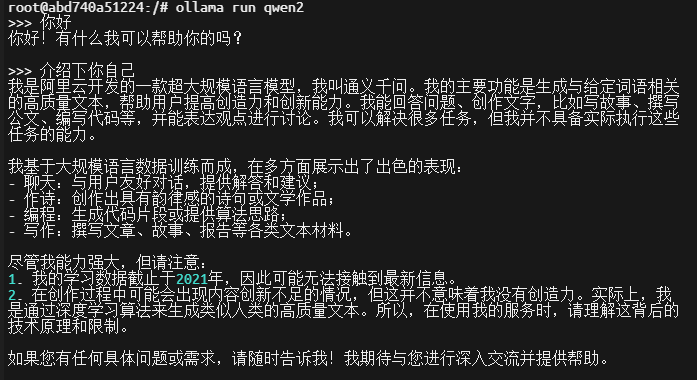

docker exec -it ollama /bin/bashOnce inside the container, go to the official website to find the large model you want to download. For example, to download Alibaba's Qwen2 model, use the command:

ollama run qwen2Once the model is downloaded and running, you can have a conversation via the command line, as shown in the image below:
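Besides the interactive command line, the port exposed by the container also serves Ollama's HTTP API, so a downloaded model can be queried from a script. The sketch below assumes the qwen2 model has already been pulled and that the server is reachable at the address you pass in; the function names are illustrative only.

```python
import json
import urllib.request


def build_generate_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(base_url: str, model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt and return the generated text from the JSON reply."""
    req = build_generate_request(base_url, model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["response"]
```

For example, generate("http://IP:11434", "qwen2", "Hello!") returns the model's answer as a string. The generous timeout is a guess to allow for slow CPU-only inference.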

Common Ollama Commands

Here are some common Ollama commands:

- Run a specified large model:

      ollama run llama3:8b-text

- View the list of local large models:

      ollama list

- View running large models:

      ollama ps

- Delete a specified local large model:

      ollama rm llama3:8b-text

Tip: More commands can also be viewed by entering ollama -h.
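The list of local models is also available over HTTP through Ollama's /api/tags endpoint, which is handy when you need the exact model names from outside the container. A minimal sketch, with illustrative function names and the base URL left to the caller:

```python
import json
import urllib.request


def model_names(tags_response: dict) -> list[str]:
    """Extract model names from the JSON returned by GET /api/tags."""
    return [m["name"] for m in (tags_response.get("models") or [])]


def list_local_models(base_url: str, timeout: float = 10.0) -> list[str]:
    """Ask a running Ollama server which models it has downloaded."""
    url = base_url.rstrip("/") + "/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return model_names(json.load(resp))
```

list_local_models("http://IP:11434") should return the same names that ollama list prints.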

Large Model Experience

So far I have downloaded the llama2, qwen2, glm4, llama3, and phi3 models and tried each of them briefly. My impressions, which are neither rigorous nor necessarily accurate, are as follows:

- The llama models are not friendly to Chinese (understandable, as they are foreign models).

- phi3:3.8b, a small model launched by Microsoft, supports multiple languages. In practice, 3.8b seems quite limited; perhaps the parameter count is too small. I wonder whether moving up to 14b would improve things.

- glm4 and qwen2 are more friendly to Chinese.

- Models with fewer parameters tend to be less capable; models of 7b and above can generally understand and converse normally, while smaller models often make mistakes.

- With the above configuration, running a 7b model on pure CPU is slightly slow.

Connecting Ollama to one-api

One-api is an open-source AI middleware service that aggregates the APIs of various large models, such as OpenAI, ChatGLM, and Wenxin Yiyan, behind a unified OpenAI-style interface. For example, ChatGLM and Wenxin Yiyan each have their own API calling conventions; one-api wraps both so that you only need to change the model name when calling, which hides the interface differences and reduces development effort.

For specific installation methods for one-api, please refer to the official project address: https://github.com/songquanpeng/one-api

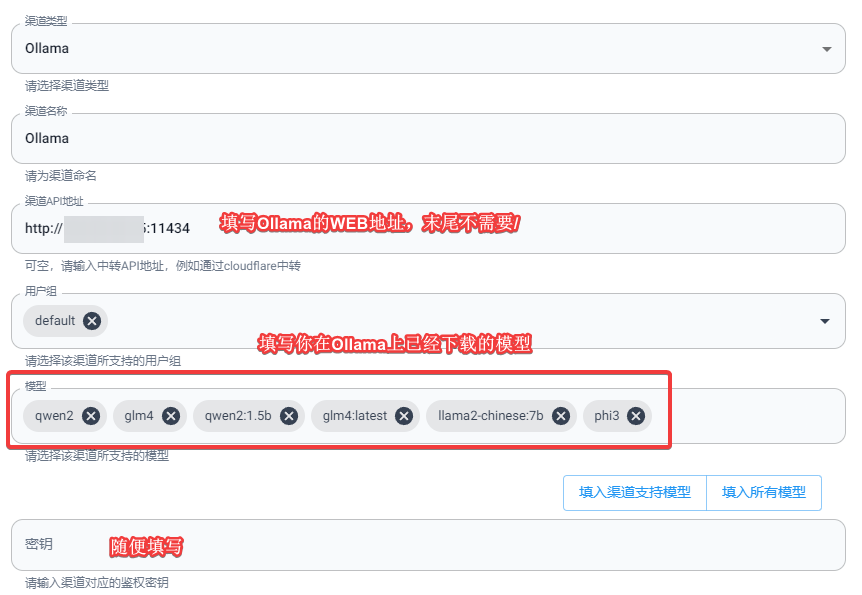

Through one-api backend >> Channels >> Add a new channel:

- Type: Ollama

- Channel API address: Fill in the Ollama WEB address, e.g.,

http://IP:11434 - Model: The name of the local large model you have downloaded on Ollama

- Key: This is a required field; since Ollama does not support authentication access by default, you can fill it in casually.

As shown in the image below:

After connecting, we can request one-api and pass the specific model name for testing, using the following command:

    curl https://ai.xxx.com/v1/chat/completions \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer sk-xxx" \
      -d '{
        "model": "qwen2",
        "messages": [
          {
            "role": "system",
            "content": "You are a helpful assistant."
          },
          {
            "role": "user",
            "content": "Hello!"
          }
        ]
      }'

- Change ai.xxx.com to your one-api domain.

- Replace sk-xxx with the token you created in one-api.

If the call is successful, it indicates that Ollama has been successfully connected to one-api.
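The same test can be run from Python. This is a sketch under the same assumptions as the curl command: https://ai.xxx.com and sk-xxx are placeholders for your one-api domain and token, and the reply is assumed to follow the OpenAI chat completions format that one-api exposes. The helper names are hypothetical.

```python
import json
import urllib.request


def build_chat_request(base_url: str, token: str, model: str, user_message: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request addressed to one-api."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_message},
        ],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of a chat completion response."""
    return response["choices"][0]["message"]["content"]


def chat(base_url: str, token: str, model: str, user_message: str, timeout: float = 300.0) -> str:
    """Send one user message through one-api and return the reply text."""
    req = build_chat_request(base_url, token, model, user_message)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return extract_reply(json.load(resp))
```

A call such as chat("https://ai.xxx.com", "sk-xxx", "qwen2", "Hello!") returning a non-empty string indicates the channel is working.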

Encountered Issues

The author tried calling Ollama through one-api in stream mode but received a blank response. According to the project's issues, this is a bug in one-api. For now, downgrading one-api to version 0.6.6 solves the problem, and hopefully the one-api author will fix it in a future release.
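To confirm whether a given one-api version is affected, it can help to check what a streamed response actually contains. The sketch below is not taken from the one-api project; it assumes the stream uses the OpenAI-style server-sent event format (lines beginning with data:, ending with data: [DONE]) and simply joins the text fragments. An empty result for a prompt that should produce text matches the blank-response symptom.

```python
import json
from typing import Iterable


def collect_stream_text(lines: Iterable[str]) -> str:
    """Join the content deltas from OpenAI-style server-sent event lines."""
    parts = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and anything that is not data
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream marker
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            delta = choice.get("delta") or {}
            parts.append(delta.get("content") or "")
    return "".join(parts)
```

The function accepts any iterable of decoded lines, for example the lines saved from a streamed curl request.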

Security Risks

Ollama itself does not provide an authentication mechanism, so deploying it on a server poses security risks: anyone who knows your IP and port can make API calls. In a production environment, the following measures can improve security.

Method 1: Linux Built-in Firewall

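- Switch the Ollama container to host networking (network_mode: host in docker-compose.yaml), since ports published by Docker typically bypass the host firewall's rules.

- Use the Linux built-in firewall to restrict access to port 11434 to specified IPs only.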

Method 2: Nginx Reverse Proxy

- Change the mapped IP of the Docker deployment of Ollama to 127.0.0.1 (for example, "127.0.0.1:11434:11434" under ports).

- Then use Nginx on the local machine to reverse proxy 127.0.0.1:11434, and set up blacklists (deny) and whitelists (allow) in Nginx.
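Nginx checks its allow and deny rules in order, and the first rule that matches the client address decides the outcome; if nothing matches, access is permitted. The Python sketch below only illustrates that evaluation order so a rule list can be reasoned about before it is written into the Nginx configuration. The addresses in RULES are made-up documentation examples, not values from this deployment.

```python
import ipaddress

# Hypothetical rule list: (action, target), where target is a CIDR network or "all".
RULES = [
    ("allow", "203.0.113.10/32"),
    ("allow", "10.0.0.0/8"),
    ("deny", "all"),
]


def is_allowed(client_ip: str, rules=RULES) -> bool:
    """Apply allow/deny rules in order; the first match wins."""
    addr = ipaddress.ip_address(client_ip)
    for action, target in rules:
        if target == "all" or addr in ipaddress.ip_network(target):
            return action == "allow"
    return True  # no rule matched, so access is not restricted
```

Ending the list with a deny for all, as in the example, is what turns the preceding allow entries into a whitelist.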

Conclusion

As an open-source tool, Ollama gives users a convenient way to deploy and call large models locally. Its compatibility and flexibility make it easy to run large language models across the major operating systems, and with a Docker-based installation users can get started quickly and switch between models as needed, which is useful for both development and research. However, because Ollama lacks a built-in authentication mechanism, users should take appropriate security measures in production environments to prevent unauthorized access. Overall, Ollama has significant practical value in promoting the application and development of local AI models. If its authentication mechanism improves in the future, it will be an even more powerful assistant for AI developers.

Some content in this article refers to:

- Ollama official website: https://ollama.com/

- Ollama GitHub project address: https://github.com/ollama/ollama

- Ollama Docker Hub: https://hub.docker.com/r/ollama/ollama

- one-api: https://github.com/songquanpeng/one-api


xiaoz

I come from China and I am a freelancer. I specialize in Linux operations, PHP, Golang, and front-end development. I have developed open-source projects such as Zdir, ImgURL, CCAA, and OneNav.
