Sharing the Process of Migrating a Million-Data Website (imgurl.org)

Publish: 2022-03-30 | Modify: 2022-03-30

Background

imgurl.org is a image hosting website operated by xiaoz since December 2017, hereinafter referred to as ImgURL. During its operation, ImgURL has undergone several migrations, but at that time the data was not large, so it was not difficult. As time went on, the amount of data increased, and as of March 29, 2022, there were already over 1,176,457 images.

Currently, the server's disk IO pressure is relatively high, mainly from MySQL read and write pressure and image processing pressure (such as image cropping, compression, etc.). At this stage, ImgURL is hosted on Psychz, but due to the poor IO performance of Psychz's mechanical hard drive, it is planned to migrate from Psychz's dedicated server to Kimsufi's dedicated server. This article is a record and sharing of the migration process.

Website Structure

ImgURL mainly consists of three parts: program (PHP), database (MySQL), and external storage. The external data is divided into four parts:

- Local

- Backblaze B2 (no need to consider migration in the cloud)

- FTP

- Self-built minio (S3)

Note: All local, FTP, and minio data are on Psychz.

Among them, the FTP and minio data are the largest:

- FTP: 188GB (number of files not counted)

- Minio data: 154GB, 670,000 objects

Database Migration

Although the MySQL data has reached millions of rows, the overall database is not very large, and it is basically a standard import and export operation.

First, export the MySQL database:

mysqldump -uxxx -pxxx imgurl>imgurl.sqlThe entire SQL file exported is only 525MB. Then use the scp command to copy it for migration (omitted here).

Next is to import the database:

mysql -u imgurl -p imgurl<imgurl.sqlThe database migration step is completed quickly.

Note: It is not recommended to use the MySQL source command for import, because the source command will print every line of operation, and printing millions of data lines one by one will drive you crazy.

Migration of Website Data

The rsync command is used to migrate the website:

rsync -aqpogt -e 'ssh -p xxx' root@IP:/xxx /xxxThe meanings of the parameters are as follows:

- -a, --archive: archive mode, which means to transfer files recursively and preserve all file attributes.

- -q, --quiet: quiet mode. This is important because if there are many files, not using quiet mode will output a lot of files.

- -p, --perms: preserve file permissions.

- -o, --owner: preserve file owner information.

- -g, --group: preserve file group information.

- -t, --times: preserve file time information.

-e 'ssh -p xxx': specify the SSH port because I used a non-standard SSH port (22).

Since this part of the website program is not very large, it didn't take too long.

FTP Data Migration

Since the FTP data has reached 188GB, it is not considered large, but there are a lot of small files. This time, the rsync command is still used to migrate the FTP data. However, before the migration, it is better to use the screen command to keep the task running in the background to avoid interruption of the window task due to long time.

# Create a new screen session

screen -S xxx

# Use the rsync command to migrate

rsync -aqpogt -e 'ssh -p xxx' root@IP:/xxx /xxxThe important thing here is to run the task in the background using the screen command or other methods, after all, there are many small files to avoid interruption. The whole process took several hours, and I forgot how long exactly.

The Most Troublesome Part: Minio Data Migration

xiaoz uses the single-node version of minio, not the minio cluster. It seems that the data of the single-node version is directly stored on the disk as the source file, so the simplest way is to synchronize the source files directly using the rsync command, but it is not clear whether there are any unknown risks in doing so. Therefore, another relatively safe approach is used, using the rclone sync command to synchronize.

I have already configured the minio information on both sides in advance on rclone (omitted steps), named psychz_s3 and kimsufi_s3. At first, I used the command to migrate the data in the 2021 bucket:

rclone sync -P psychz_s3:/imgurl/imgs/2021 kimsufi_s3:/imgurl/imgs/2021It took 13 hours and there were many errors, with the error message:

The Content-Md5 you specified did not match what we received.

The number of error files reached 55,269, which means that 55,269 files failed to migrate due to failed MD5 verification. The reason for this error should be that I directly modified the source files of minio using non-standard operations before (the source files of the minio single-node version can be directly viewed and modified, and I directly compressed these image source files before, causing the MD5 to change). Due to the large number of files, combined with the poor IO of Psychz, rclone spent a lot of time scanning.

Then continue to migrate the remaining data. This time I became smarter and optimized the command as follows:

rclone sync --s3-upload-cutoff 0 --tpslimit 10 --ignore-checksum --size-only -P psychz_s3:/imgurl/imgs/2022/03 kimsufi_s3:/imgurl/imgs/2022/03--s3-upload-cutoff 0

For small objects that were not uploaded as multipart uploads (the object size is as follows if using rclone to upload with --s3-upload-cutoff), rclone uses the ETag header as the MD5 checksum.

However, for objects uploaded as multipart uploads or with server-side encryption (SSE-AWS or SSE-C), the ETag header is no longer the MD5 sum of the data. Therefore, rclone adds an additional metadata X-Amz-Meta-Md5chksum, which is a base64-encoded MD5 hash (the same format as required by Content-MD5).

For large objects, calculating this hash may take some time, so you can disable adding this hash with --s3-disable-checksum. This means that these objects do not have an MD5 checksum.

Note that reading it from the object requires an additional HEAD request since the metadata is not returned in the object listing.

After reading the official description, I still don't quite understand the specific meaning of the --s3-upload-cutoff parameter.

--tpslimit 10

The effect of this parameter is described by the official as follows:

This limits the transfer rate in transactions per second. It can be useful to limit the rate of transactions made to the S3 backend.

--ignore-checksum: Ignore hash verification (MD5). This parameter is useful for a large number of small files, but it may reduce reliability.--size-only: Only verify the size of the file.

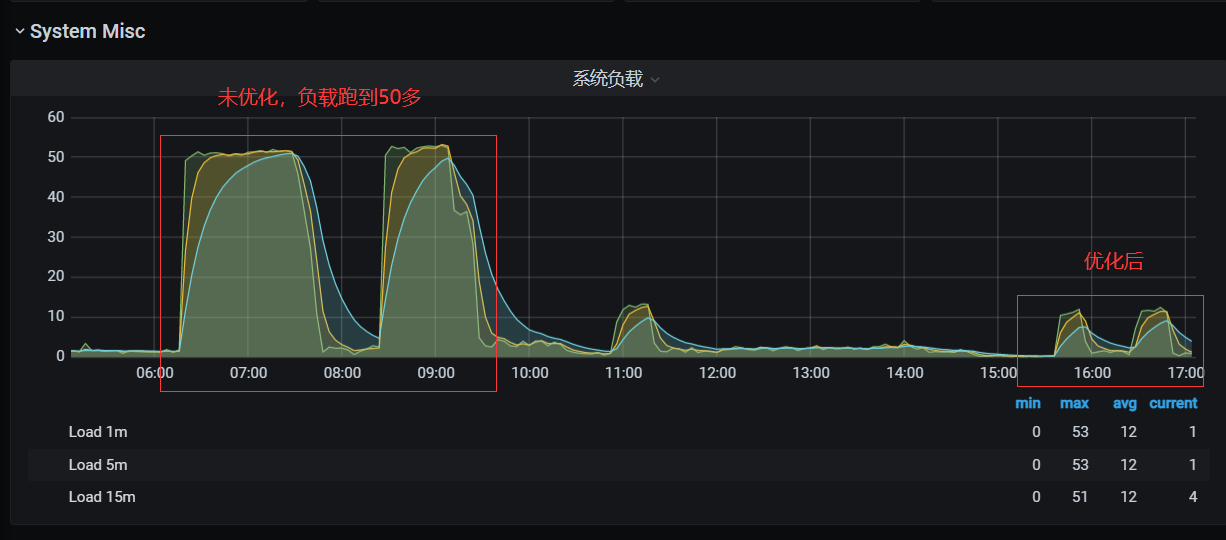

After monitoring and observation, the effect of the command before and after optimization is significant, and the system load also decreases linearly (because the scanning should be reduced).

With 154GB of data and 670,000 objects, it took more than 30 hours to synchronize the minio data using rclone sync. There are probably many other optimization parameters for rclone. Interested friends can study the rclone official documentation.

Summary

As of March 30, 2022, https://imgurl.org/ has been successfully migrated from Psychz to Kimsufi. The whole process was not difficult, but the rclone sync synchronization of minio data took too much time. The summary is as follows:

- It is not recommended to use the

sourcecommand of MySQL for import. It is recommended to use the following method:mysql -u imgurl -p imgurl<imgurl.sql. - If a task may take a very long time, be sure to use the

screencommand or similar methods to keep the task running in the background to avoid interruption of the task due to the window being closed. rclone synccan improve efficiency through a series of optimization parameters, such as ignoring hash verification--ignore-checksum.- For local file migration in Linux, it is recommended to use

rsync, which is simple and efficient.

Comments

xiaoz

I come from China and I am a freelancer. I specialize in Linux operations, PHP, Golang, and front-end development. I have developed open-source projects such as Zdir, ImgURL, CCAA, and OneNav.

Random article

- OneNav 1.0.0 Official Release: New Batch Link Checking Feature

- Automatically Compress Images in Bulk Using Shell Script in Linux Environment

- 115 Network Disk 618 Limited Time Promotion: Only 500 Yuan for 4-Year VIP Service

- DokuWiki Pseudo-Static Configuration for Nginx

- Youpaiyun + ShareX = Create Your Own Image Hosting Tool

- ImgURL (www.imgurl.org) User Function Online, Register and Get 500 Free Monthly Quota

- Wild Grass Cloud 2024 Spring Special Offer: Hong Kong Cloud Servers as low as 88 yuan/year

- CSS Implementation of Gradient Color, Left to Right, Top to Bottom

- Powerful and user-friendly PHP integrated development environment Laragon

- Installing fail2ban + Firewalld on CentOS 7 to prevent brute force and CC attacks